CogChar - Cognitive Character Software

CogChar is an open source cognitive character technology platform.

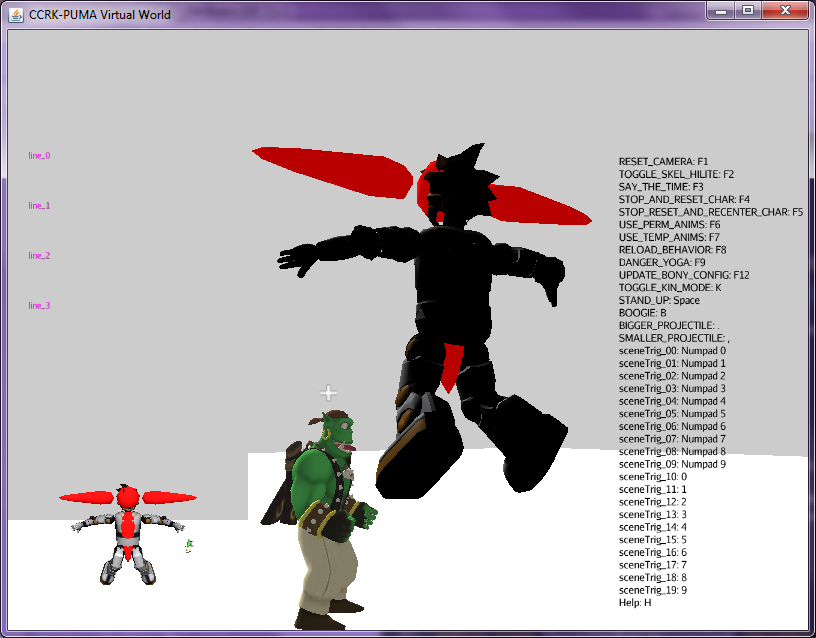

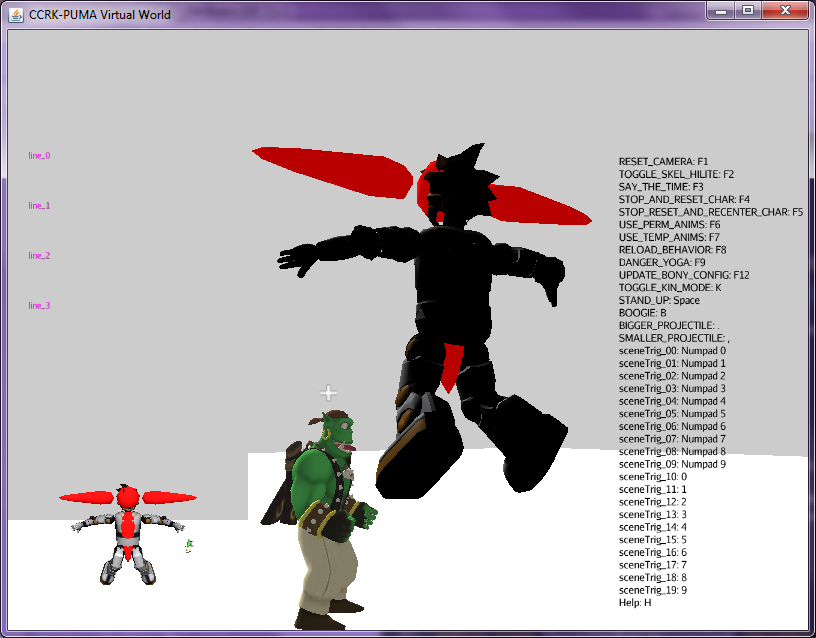

Our characters may be physical (robots), virtual, or both.

Applications include therapeutics, education, research, and fun!

The CogChar project is Java-based but network-integrable, connecting several other open source projects.

Cogchar provides an important ingredient of the Glue.AI software recipe.

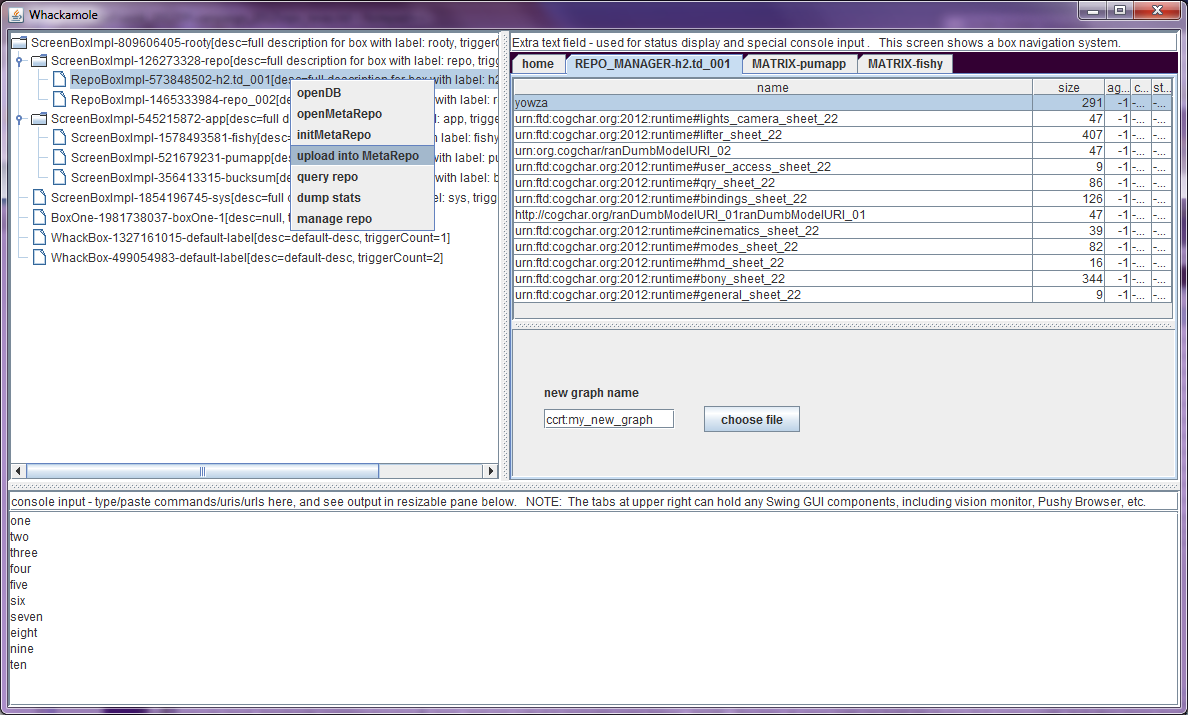

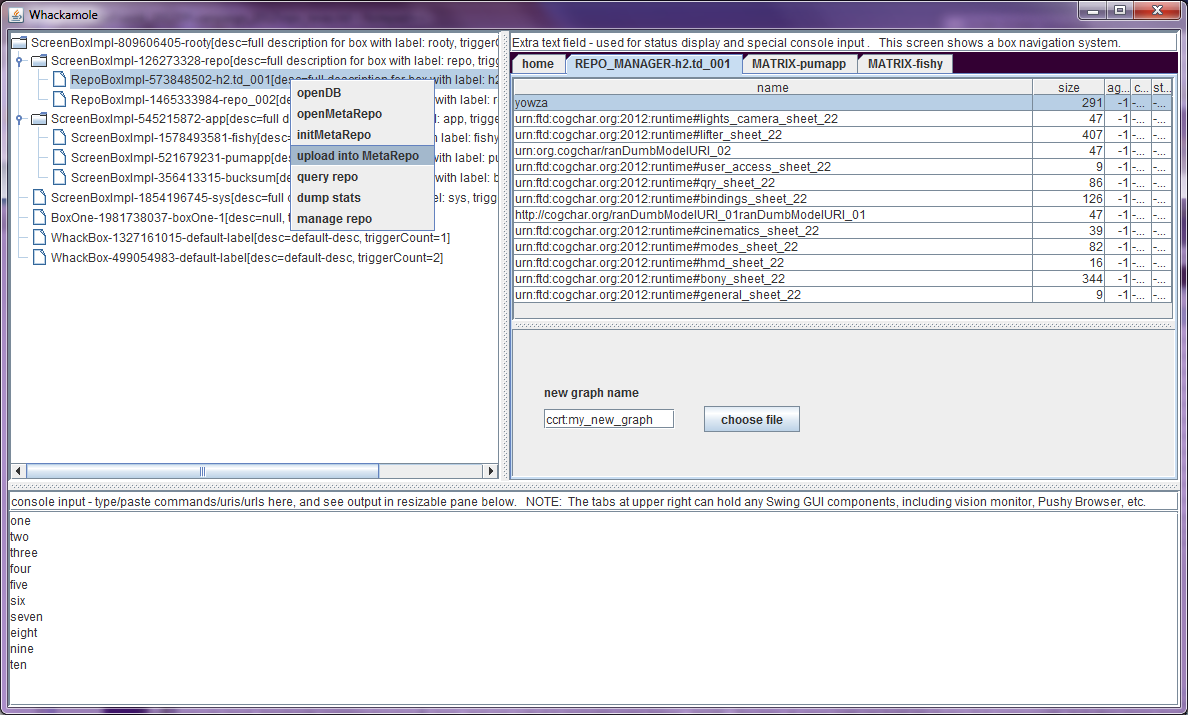

CogChar provides a set of connected subsystems for experimental, iterative character creation.

We aim to engineer a highly integrated yet modular system that allows many intelligent humanoid character features to be combined as freely and yet robustly as possible.

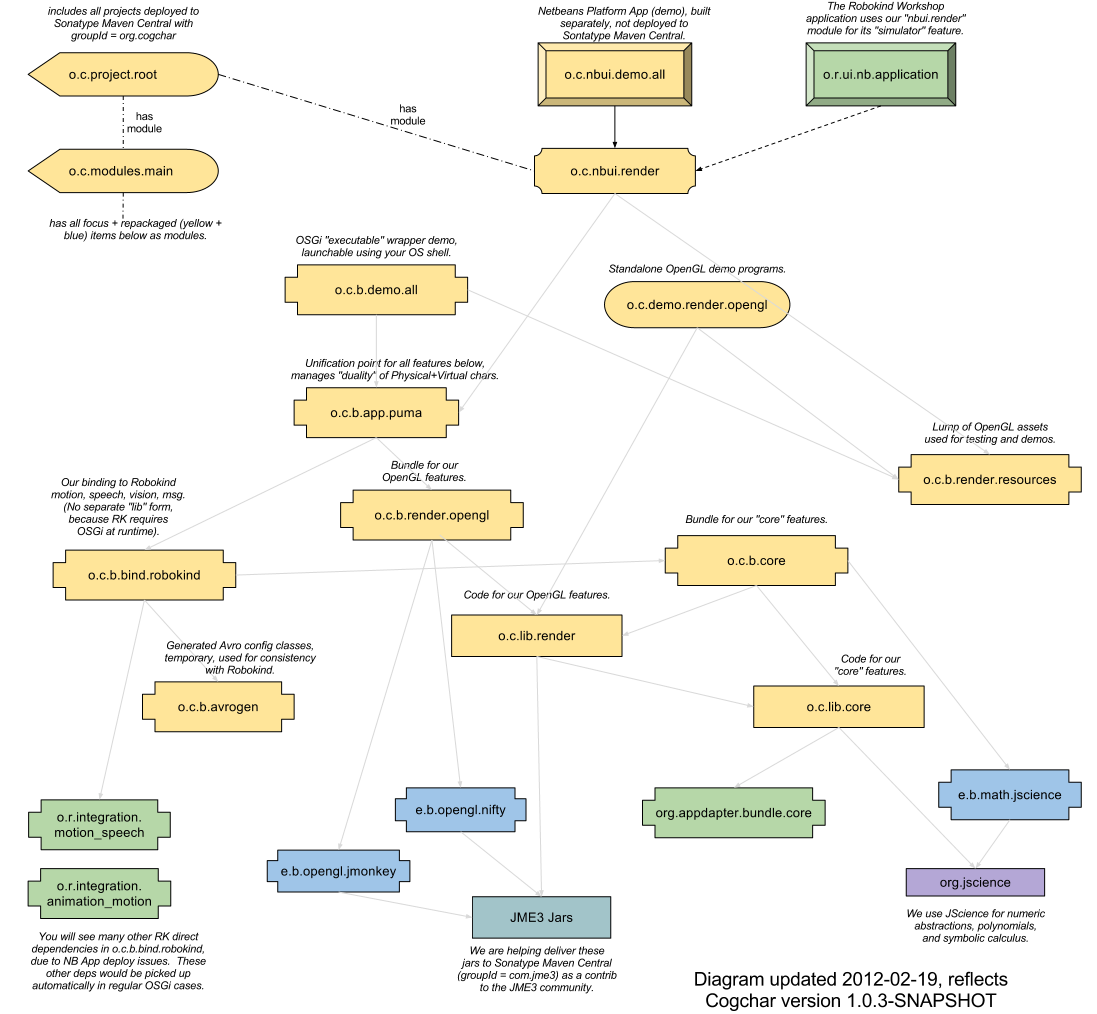

We achieve robust modularity using the OSGi standard for Java applications, although our individual features can often be run outside of OSGi.

Our outlook for Android compatibility is very bright, although we can't control or predict what Oracle and Google will do in the coming years.

We directly support networking over HTTP and AMQP.

Cogchar by itself is primarily a set of bundles used to assemble your character application.

We do have some runnable demonstrations included in our bundles.

Here is a spreadsheet summary of our user interface components and Glue.AI containers.

External Features

Many of our subsystems come to us through other open source software projects (many of which are part of the Glue.AI effort). Specifically, Cogchar has the following main external ingredients:

- Humanoid robotics capabilities of the MechIO open source robotic device layer, including APIs for servos, sensors, animation, vision, and speech.

- RDF/SPARQL/OWL semantic software stack of the Appdapter project (which itself is primarily dependent on Jena)

- 3D character rendering to OpenGL using JMonkey Game Engine, LWJGL, BulletPhysics

- Web interaction using the Lift framework (combine or replace with your own bundled webapp using the PAX-WEB launcher).

As this list suggests, one of the main goals of the Cogchar project is to achieve a useful simulation duality between real robotics worlds in the MechIO space and various onscreen 3D OpenGL worlds. We seek to do that as flexibly as possible, with world mappings defined in the semantic space and minimal need for software extensions.
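As a minimal sketch of what one such world mapping might reduce to, assume (hypothetically) that a physical servo reports a normalized position in [0, 1] and the matching virtual joint expects an angle in radians. The `JointMapping` class below is illustrative only and is not part of any Cogchar, MechIO, or JMonkey API; in Cogchar the mapping parameters would be defined in the semantic (RDF) space, which this sketch does not model.

```java
/**
 * Hypothetical linear mapping between a physical servo's normalized position
 * (0.0 to 1.0) and a virtual joint angle in radians. Not a Cogchar API.
 */
final class JointMapping {
    private final String jointName;
    private final double minRadians; // virtual angle when the servo reads 0.0
    private final double maxRadians; // virtual angle when the servo reads 1.0

    JointMapping(String jointName, double minRadians, double maxRadians) {
        if (minRadians == maxRadians) {
            throw new IllegalArgumentException("angle range must be non-empty");
        }
        this.jointName = jointName;
        this.minRadians = minRadians;
        this.maxRadians = maxRadians;
    }

    String jointName() {
        return jointName;
    }

    /** Physical to virtual: clamp the servo reading, then interpolate linearly. */
    double toVirtual(double normalized) {
        double clamped = clamp01(normalized);
        return minRadians + clamped * (maxRadians - minRadians);
    }

    /** Virtual to physical: the inverse interpolation, clamped to the servo range. */
    double toPhysical(double radians) {
        return clamp01((radians - minRadians) / (maxRadians - minRadians));
    }

    private static double clamp01(double x) {
        return Math.max(0.0, Math.min(1.0, x));
    }
}
```

Because both directions derive from the same two parameters, a mapping like this can be authored and tested as data, one entry per joint, rather than as a new class per joint.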

(Images of Zeno Robot are copyright by RoboKind Robots, and are used here with permission. Please do not reproduce without permission from RoboKind Robots).

Internal Feature Goals

- Flexible behavior authoring, editing, sharing, and triggering.

- Variable, authorable, testable mappings between various character and world coordinate systems, as well as between symbolic spaces.

- Fusion of sensor and symbol streams, applied to self-image and model of world.

- Character sees, hears, touches and knows in many related symbolic and physical/virtual/numeric dimensions.

- My left-eye camera sees some pixels, some of which are recognized as a face, which may be matched to a name, which I may choose to say, producing a certain sound...

- I am standing on a floor, so my feet feel pressure from the floor. I am holding my torso erect above my legs, and my head above my torso. I am looking at a person called Samantha. I can describe these facts to you in spoken words, and you can also see these facts reflected in my physical/virtual display, because they are coming from the same world-image, built from the current fused estimate of all available information (both physical and symbolic).

- Blended adaptive estimators and controllers

- Run a wide variety of numeric and symbolic learning algorithms (Bayesian, HMM, neural, genetic, you name it), using Java, Scala, R, Weka, Hadoop, and DL-Learner.

- Metadata-driven analysis and update of 1) immutable trajectory logs (pruned) and 2) mutable state vectors (Markovian).

- Reasoning and rendering of body and mind

- Interact with visualizations of the character's body, mind, and environment, overlapped in interesting ways (what's inside the character's left ear right now?)

- Standard OpenGL 3D display for any compatible device (computer, tablet, smartphone, game console)

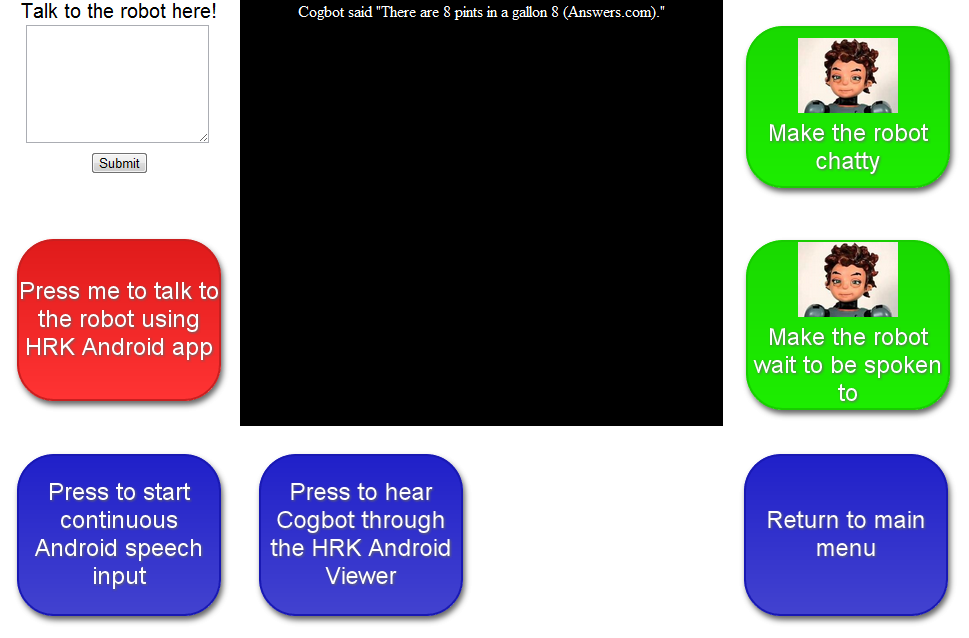

- Ongoing conversation coordination

- Keep track of many conversation partners over any length of time (minutes to years).

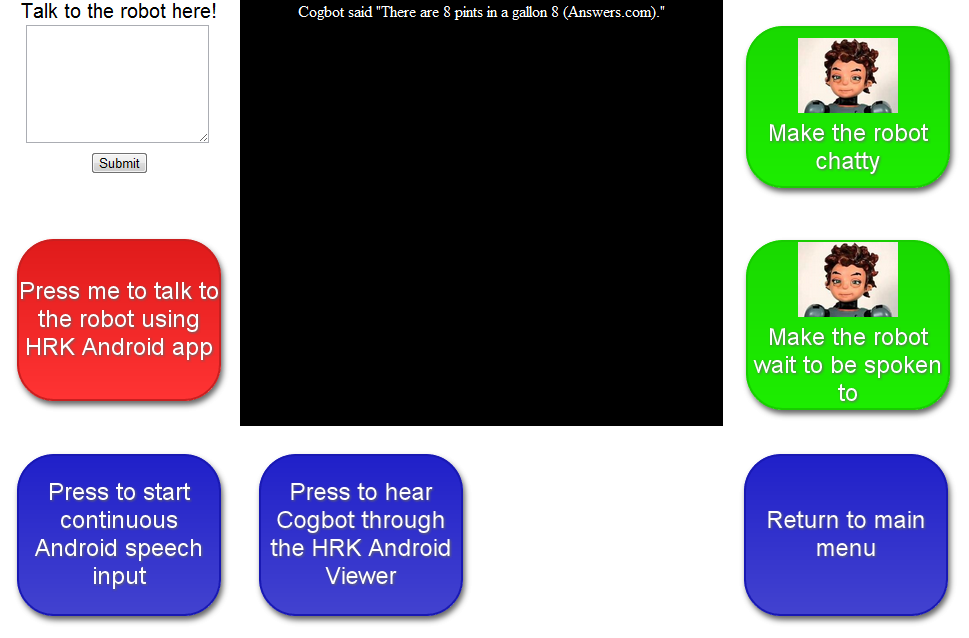

- Consult reference sources during conversations, and decide which ones to mention (feature provided by Cogbot).

- Conversation is integrated with embodiment, self-image, and world model.
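The trajectory-log and state-vector goal above distinguishes two kinds of data. As a minimal sketch in plain Java, assuming hypothetical classes `Sample`, `TrajectoryLog`, and `StateVector` (none of these are Cogchar APIs), an immutable log is pruned by producing a new, shorter log, while a Markovian state vector is updated in place from only its current values and the latest observation. The exponential blending used here is our own illustrative choice of estimator, not necessarily what Cogchar uses, and the metadata-driven selection of what to log or prune is not modeled.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical immutable trajectory sample: a timestamp plus a copied state vector. */
final class Sample {
    private final long timeMillis;
    private final double[] state;

    Sample(long timeMillis, double[] state) {
        this.timeMillis = timeMillis;
        this.state = state.clone(); // defensive copy so callers cannot mutate logged data
    }

    long timeMillis() { return timeMillis; }
    double[] state() { return state.clone(); }
}

/** Immutable log: append and prune both return a new log and leave this one untouched. */
final class TrajectoryLog {
    private final List<Sample> samples;

    TrajectoryLog() { this(new ArrayList<>()); }

    private TrajectoryLog(List<Sample> samples) {
        this.samples = Collections.unmodifiableList(samples);
    }

    TrajectoryLog append(Sample sample) {
        List<Sample> next = new ArrayList<>(samples);
        next.add(sample);
        return new TrajectoryLog(next);
    }

    /** Keeps only samples at or after the cutoff time. */
    TrajectoryLog pruneBefore(long cutoffMillis) {
        List<Sample> kept = new ArrayList<>();
        for (Sample s : samples) {
            if (s.timeMillis() >= cutoffMillis) kept.add(s);
        }
        return new TrajectoryLog(kept);
    }

    int size() { return samples.size(); }
}

/** Mutable, Markovian state: each update uses only the current values and one observation. */
final class StateVector {
    private final double[] values;
    private final double gain; // blending weight in (0, 1]

    StateVector(double[] initial, double gain) {
        if (gain <= 0.0 || gain > 1.0) throw new IllegalArgumentException("gain must be in (0, 1]");
        this.values = initial.clone();
        this.gain = gain;
    }

    void update(double[] observation) {
        if (observation.length != values.length) throw new IllegalArgumentException("dimension mismatch");
        for (int i = 0; i < values.length; i++) {
            values[i] += gain * (observation[i] - values[i]); // exponential blend toward the observation
        }
    }

    double get(int i) { return values[i]; }
}
```

Keeping the two structures separate means the full history stays auditable for offline learning, while the live state stays small and cheap to update each frame.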

Technical Summary

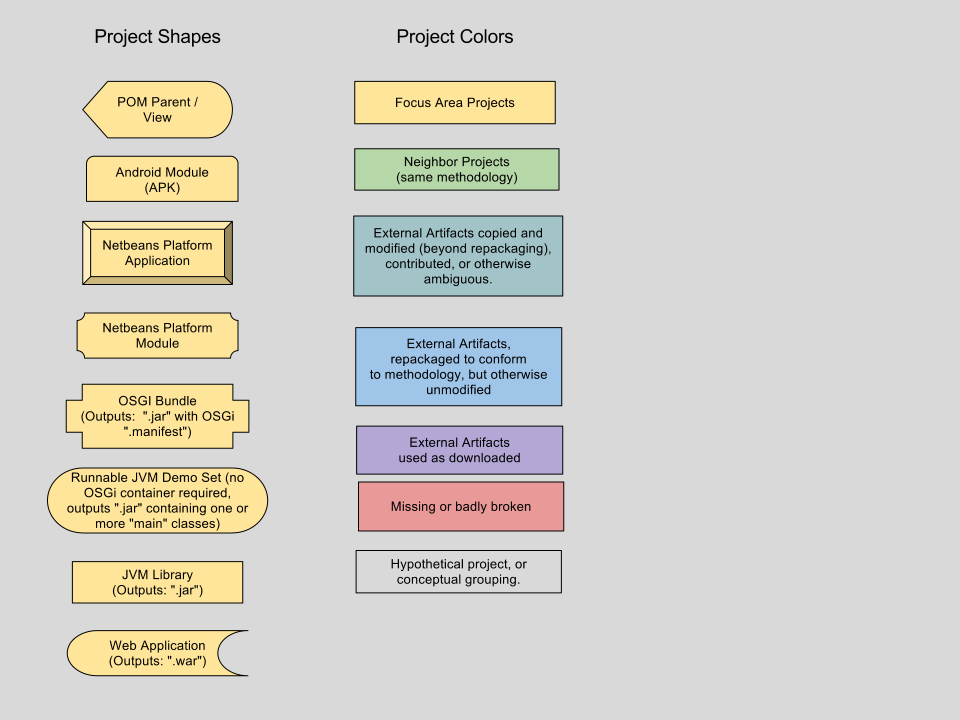

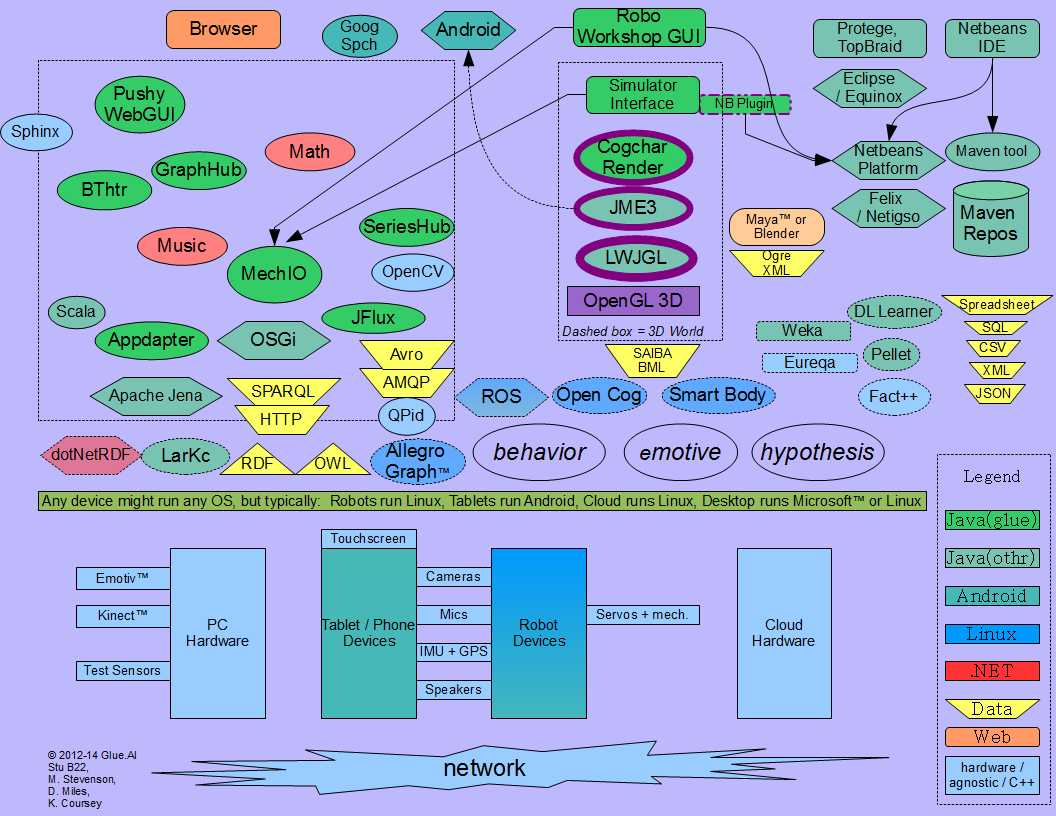

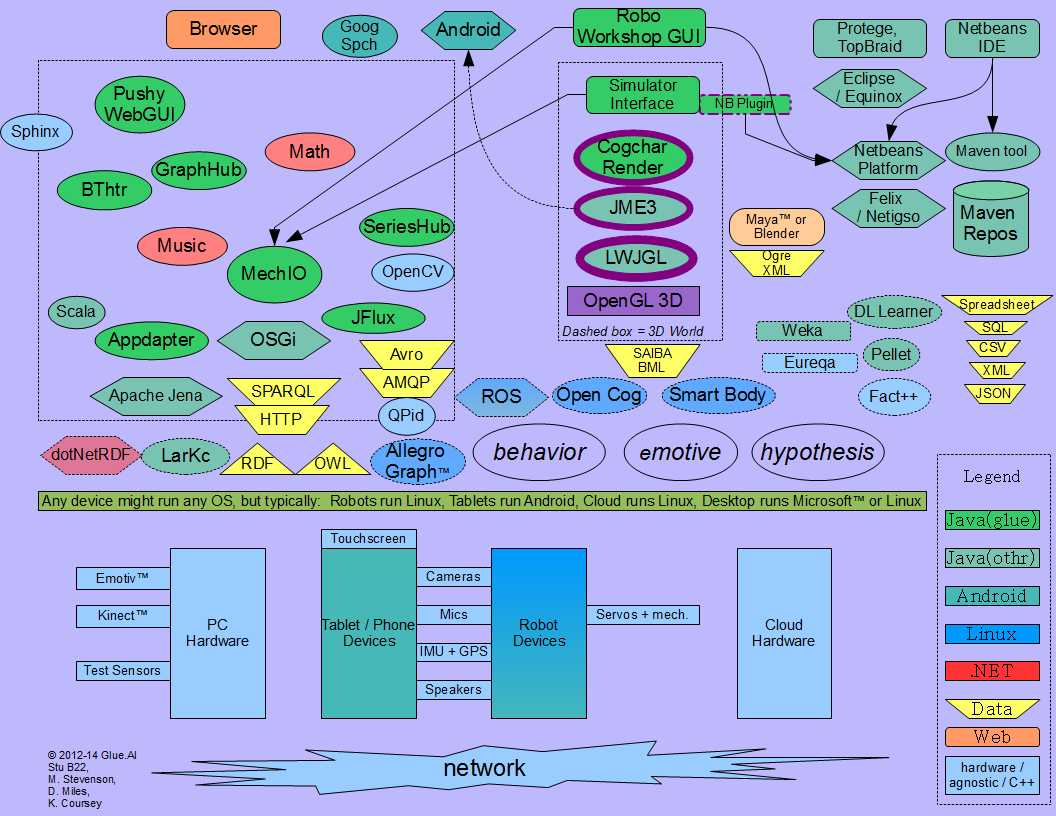

The diagrams below provide a quick visual introduction to Glue.AI vocabulary and structure, in some typical scenarios.

System context and vocabulary diagram (mostly without connectors), covering physical and virtual characters in a variety of deployment types.


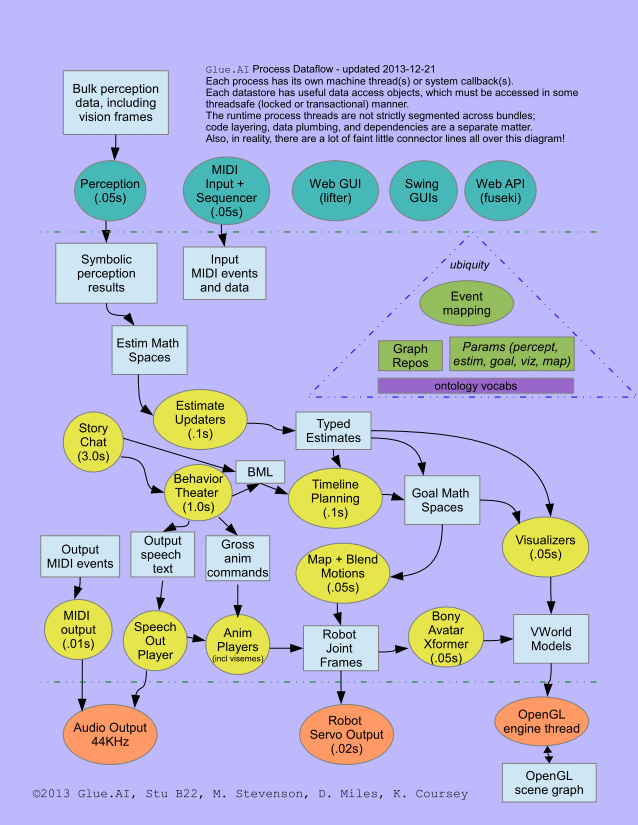

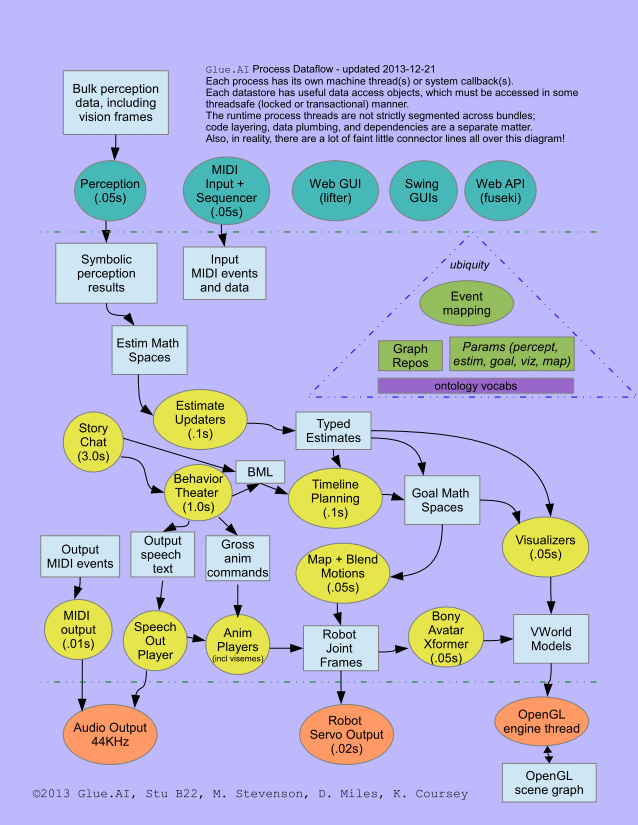

Process dataflow and threading view of a typical Glue.AI character deployment.


- For additional "big picture" perspectives, please see

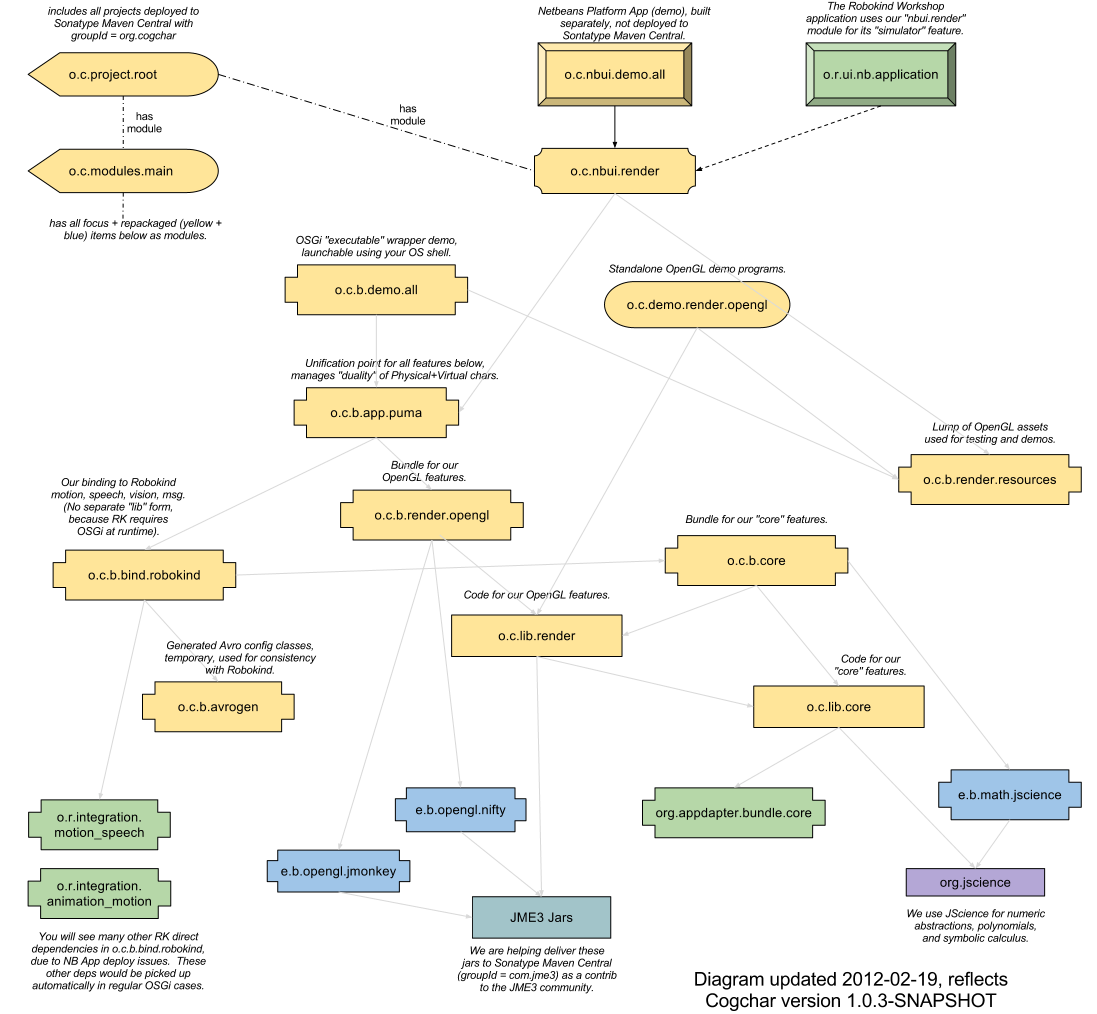

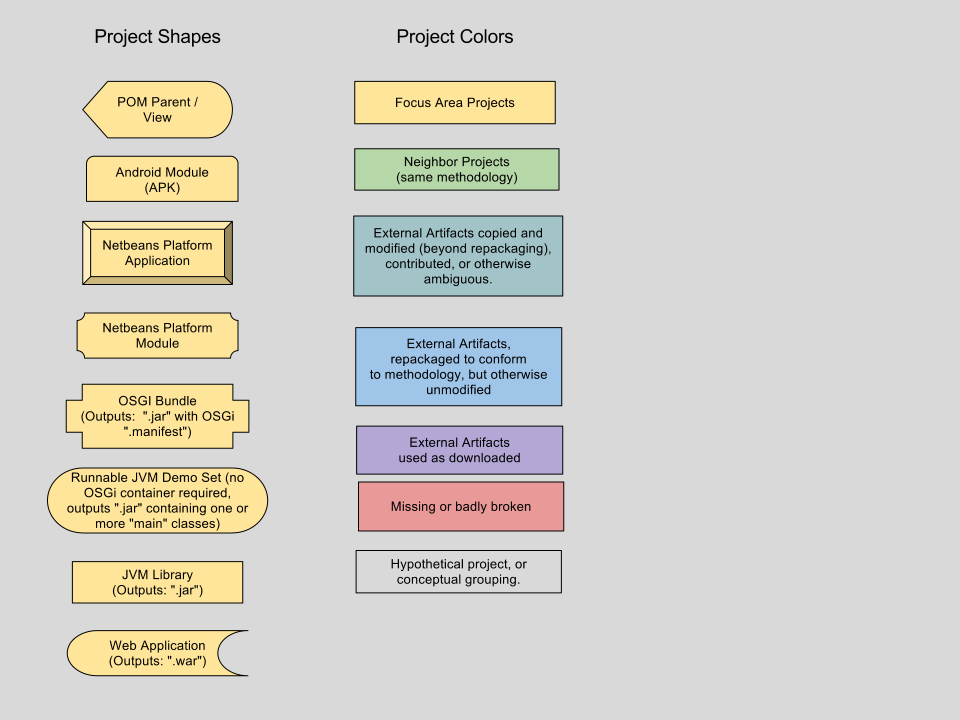

- Dependencies diagram below this bullet list. Here is the diagram legend.

-

Architecture + dependencies

presentation on the experimental Friendularity.org site.

- Core Technology: Java, RDF, OSGi, Scala, Maven

- MechIO - Binding to (open-source + commercial) physical humanoid robot features. CCMIO = Cogchar+MechIO

- Symbolic metadata smarts (RDF/OWL/SPARQL) from Appdapter platform

- 3D character rendering to OpenGL using JMonkey Game Engine, LWJGL, BulletPhysics

- Connection with many popular open source mathematics, AI and robotics tools

- Cogbot - Advanced conversation features, emotion simulation, and links to online virtual worlds

- Integration with MIDI + SMPTE music+video technology for action mapping, triggering, and synchronization

- Serialization to Avro, messaging over SOH, AMQP, cloud integration via Hadoop

- Planned compatibility with Android platform
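To illustrate the kind of arithmetic the MIDI + SMPTE synchronization item involves, here is a minimal sketch that converts an SMPTE timecode to a frame count and to milliseconds, for example to schedule an animation cue against a video. It assumes non-drop-frame timecode at an integer frame rate, so frames = (3600·HH + 60·MM + SS)·fps + FF; drop-frame (29.97 fps) timecode is not handled. The `SmpteTimecode` class is hypothetical and not part of Cogchar.

```java
/**
 * Hypothetical helper: non-drop-frame SMPTE timecode "HH:MM:SS:FF" at an
 * integer frame rate. Drop-frame timecode is NOT handled.
 */
final class SmpteTimecode {
    private final int hours, minutes, seconds, frames, fps;

    SmpteTimecode(int hours, int minutes, int seconds, int frames, int fps) {
        if (fps <= 0) throw new IllegalArgumentException("fps must be positive");
        if (hours < 0 || minutes < 0 || minutes > 59 || seconds < 0 || seconds > 59
                || frames < 0 || frames >= fps) {
            throw new IllegalArgumentException("timecode field out of range");
        }
        this.hours = hours;
        this.minutes = minutes;
        this.seconds = seconds;
        this.frames = frames;
        this.fps = fps;
    }

    /** Parses "HH:MM:SS:FF"; throws NumberFormatException on non-numeric fields. */
    static SmpteTimecode parse(String text, int fps) {
        String[] parts = text.split(":");
        if (parts.length != 4) throw new IllegalArgumentException("expected HH:MM:SS:FF");
        return new SmpteTimecode(Integer.parseInt(parts[0]), Integer.parseInt(parts[1]),
                Integer.parseInt(parts[2]), Integer.parseInt(parts[3]), fps);
    }

    /** Total frames elapsed since 00:00:00:00. */
    long totalFrames() {
        long wholeSeconds = hours * 3600L + minutes * 60L + seconds;
        return wholeSeconds * fps + frames;
    }

    /** Elapsed time in milliseconds, truncated to a whole millisecond. */
    long toMillis() {
        return totalFrames() * 1000L / fps;
    }
}
```

For example, `00:01:00:15` at 30 fps is 60 × 30 + 15 = 1815 frames, or 60500 ms.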

For More Information

- Source tree (Maven is not strictly required, but it makes life easier, for us!)

- Somewhat old Javadocs on Jarvana; be sure to check the version, since Jarvana lags behind Maven Central.

- Please visit the Wiki in our workspace.

- Visit the Appdapter, MechIO, and JMonkey-Engine websites, as well as the more general Glue.AI resources.

- You may ask questions and discuss the software in the cogchar-users Google group.